The False Promise of AI in Manufacturing

Why AI tools like ChatGPT fail in manufacturing contexts and what it takes to build AI systems that actually work in complex industrial environments.

There's a particular kind of logic that has taken over many boardrooms in manufacturing: since large language models like ChatGPT can generate emails, write summaries, and answer trivia, among other things, they should be able to revolutionize the way engineers solve real-world problems, too. But that reasoning relies on several premises that aren't true.

If you've tried asking ChatGPT or Gemini to solve an engineering problem, you've probably had this experience: you paste in a document, ask a question, and it gives you a cheerful, overly confident answer … that completely misses the point.

This isn't because ChatGPT is bad. It's because it doesn't understand context.

It doesn't know that one document is a calibration log and another is a PowerPoint summarizing a failed root cause investigation. It doesn't remember that you changed suppliers six months ago. It doesn't know a lot of things.

In practice, large language models (LLMs) tend to agree and please rather than push back or offer anything close to critical analysis. LLM-based chatbots avoid disagreeing, often parroting back vague, agreeable replies. They don't understand what your data means. And without meaning, all you get is answers that sound right but aren't useful.

Engineering questions require domain-specific insight and reasoning across complex systems. An AI tool that gives feel-good answers is not beneficial when a production line stops unexpectedly or a defect in material design threatens the integrity of your product.

And yet, many organizations continue to throw money at "AI transformation" without building the infrastructure or the understanding required to make it work.

Your Data Troubles

This is the paradox: companies are investing in "cutting-edge" AI while the majority of their information is still trapped in PDFs in some folder somewhere.

Manufacturing data isn't just in tables and spreadsheets. It's in PDF manuals, scribbled maintenance notes, operator checklists, emails from Bob in quality control, and that one PowerPoint from 2018 that everyone forgot existed. This is what we call 'unstructured data.'

In fact, according to recent data from Statista, over 80% of enterprise data is unstructured. And yet, most AI tools barely scratch the surface of it. Worse, many companies aren't even sure where all their unstructured data lives. It's spread across Google Drive folders, outdated servers, and tools installed on individual laptops. Some of it is misnamed or duplicated. Much of it is completely inaccessible. You get the idea.

What Needs to Change

So what would it actually look like to use AI in a way that works?

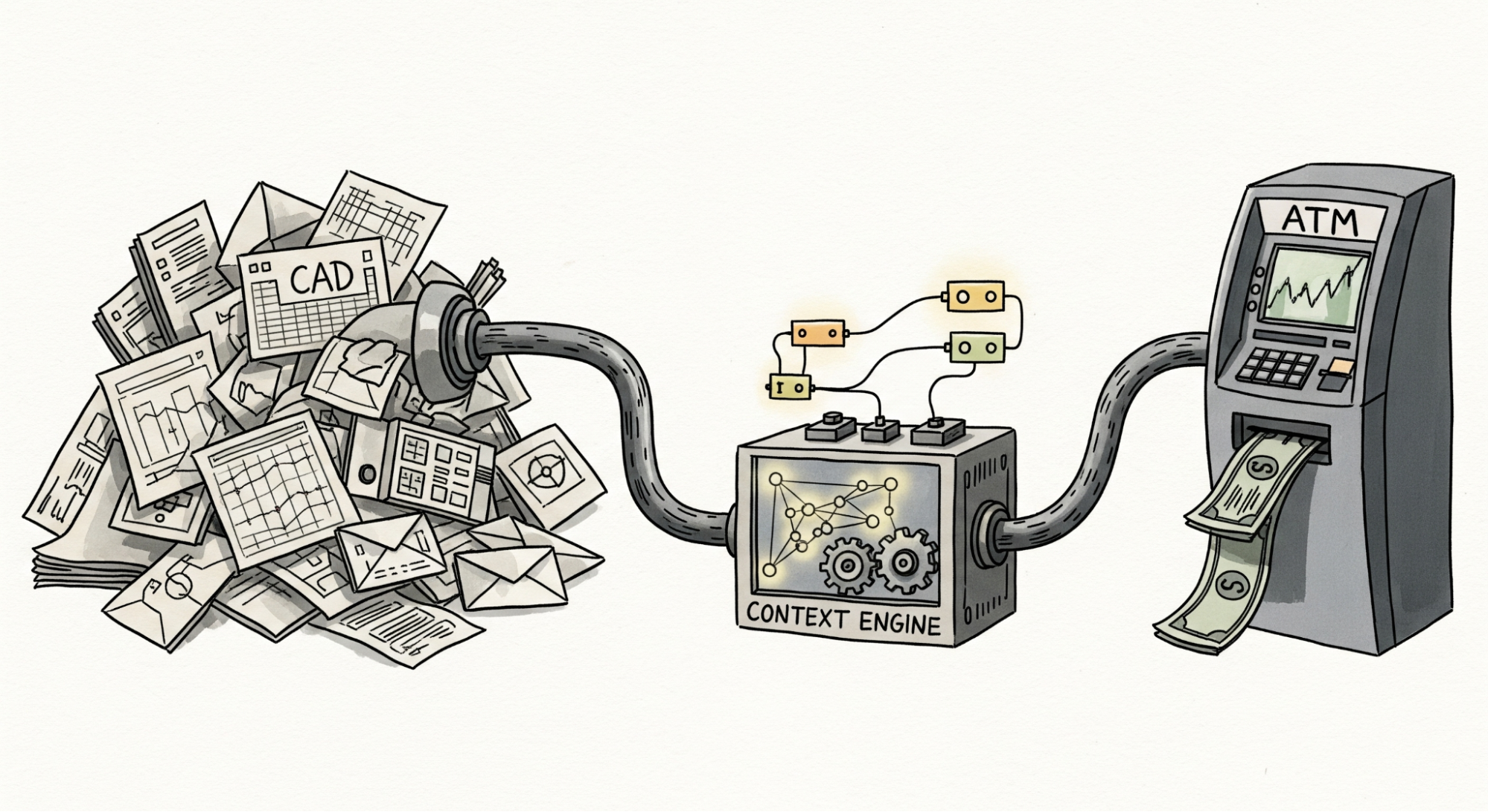

It begins with thinking of AI as an interface between humans, data, and decision-making. The quality of the insights it provides depends entirely on the context you feed it.

To get real value from AI in manufacturing:

Understand your data, especially the unstructured knowledge you've accumulated over time. There's a treasure trove of operational context hiding in maintenance logs, PDFs, emails, and legacy documents. This is the basis of the context you will be providing to the AI.

Connect the dots with knowledge graphs. Extracting text on its own is not sufficient. You need to map the relationships between the pieces of information within the text, so that you can connect a failure mode to a material specification and, ultimately, to a supplier. This is where knowledge graphs come in: they link structured and unstructured data into chunks of information and relationships that AI can reason over.

Embed this graph into every AI interaction. By feeding the graph into your AI tools, you ground their output in your real-world operations. This also enables complex reasoning by linking information that was once scattered across documents and data silos. AI can't think critically if it doesn't know how your factory works or what matters to your team.
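To make the last two steps more concrete, here is a minimal sketch in Python of linking a failure mode to a material specification and a supplier, then turning those links into context for a question. It is an illustration under our own assumptions, not a description of how any particular product works: the `KnowledgeGraph` class, the `build_prompt` helper, the identifiers (`FM-017`, `PART-B12`, `SPEC-MS204`, `SUP-ACME`), and the file names are all hypothetical, and no real LLM API is called.

```python
from collections import defaultdict, deque


class KnowledgeGraph:
    """Tiny in-memory graph of (subject, relation, object) facts.

    Hypothetical illustration: a production system would likely use a
    graph database and an extraction pipeline instead.
    """

    def __init__(self):
        # subject -> list of (relation, object, source document)
        self._edges = defaultdict(list)

    def add(self, subject, relation, obj, source):
        self._edges[subject].append((relation, obj, source))

    def neighborhood(self, start, max_hops=3):
        """Breadth-first walk: facts reachable from `start` within max_hops."""
        seen = {start}
        queue = deque([(start, 0)])
        triples = []
        while queue:
            node, depth = queue.popleft()
            if depth == max_hops:
                continue  # do not expand beyond the hop limit
            for relation, obj, source in self._edges.get(node, []):
                triples.append((node, relation, obj, source))
                if obj not in seen:
                    seen.add(obj)
                    queue.append((obj, depth + 1))
        return triples


def build_prompt(question, triples):
    """Render graph facts as plain-text context placed ahead of the question."""
    facts = "\n".join(
        f"- {s} {r} {o} (source: {src})" for s, r, o, src in triples
    )
    return (
        "Answer using only the facts below. "
        "If they are insufficient, say so.\n\n"
        f"Facts:\n{facts}\n\nQuestion: {question}"
    )


# Invented example data: each fact records the document it came from.
graph = KnowledgeGraph()
graph.add("FM-017", "observed_in", "PART-B12", "maintenance_log.pdf")
graph.add("PART-B12", "made_from", "SPEC-MS204", "material_spec.pdf")
graph.add("SPEC-MS204", "supplied_by", "SUP-ACME", "supplier_email.eml")

question = "Which supplier is linked to failure mode FM-017?"
prompt = build_prompt(question, graph.neighborhood("FM-017"))
print(prompt)
```

The resulting `prompt` string is what you would pass to whichever model you use. Keeping the source document next to each fact is a deliberate choice in this sketch: it lets an engineer trace an answer back to the log, specification, or email it came from.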

Our point isn't that AI doesn't work; it's that AI doesn't work without context. Manufacturing is too complex and too high-stakes for generic tools and vague answers. If you want meaningful results, you need to start by structuring your knowledge, connecting it with purpose, and feeding your AI the full picture.

Why We Are Building in the Open

We set out to solve a problem we kept seeing over and over again: smart teams in manufacturing, stuck with disconnected data and generic AI tools that didn't understand the context. We're documenting our work not because we have all the answers, but because we believe others in the industry are facing the same challenges. We think solving them is too important to do behind closed doors; that's why this is the first of many articles demystifying AI in manufacturing. If you're trying to bring structure to your own data chaos or make AI useful in real-world operations, you're not alone. We're on the same path.

We are on a mission to make Anvil the best tool for making sense of manufacturing data and to usher in the AI revolution as we all imagined it. Anvil was built to offer a different approach. It respects the complexity of manufacturing, the value of context, and the limits of large language models.

If there's one lesson we can take from the growing list of failed AI projects, it's this: You cannot solve a knowledge infrastructure problem with a language tool. You cannot fix decades of unstructured sprawl with a chatbot. And you cannot expect meaningful answers from a system that has no understanding of your data or your goals.